Let’s not pretend this is business as usual. The moment we invited AI to join our content teams (ghostwriters with silicon souls, tireless illustrators, teaching assistants who never sleep), we also opened the door to a host of questions that are more than technical. They’re ethical. Legal. Human. And, increasingly, urgent.

In corporate learning, marketing, customer education, and beyond, generative AI tools are reshaping how content gets made. But for every hour saved, a question lingers in the margins: “Are we sure this is okay?” Not just effective, but lawful, equitable, and aligned with the values we claim to champion. These are ideas I explore daily in my work with Adobe’s Digital Learning Software teams, building tools for corporate training such as Adobe Learning Manager, Adobe Captivate, and Adobe Connect.

This article explores four big questions every organization should be wrestling with right now, along with real-world examples and guidance on what responsible policy might look like in this brave new content landscape.

1. What Are the Ethical Concerns Around AI-Generated Content?

AI is an impressive mimic. It can turn out fluent courseware, clever quizzes, and eerily on-brand product copy. But that fluency is trained on the bones of the internet: a vast, often ugly fossil record of everything we’ve ever published online.

That means AI can, and often does, reflect back our worst assumptions:

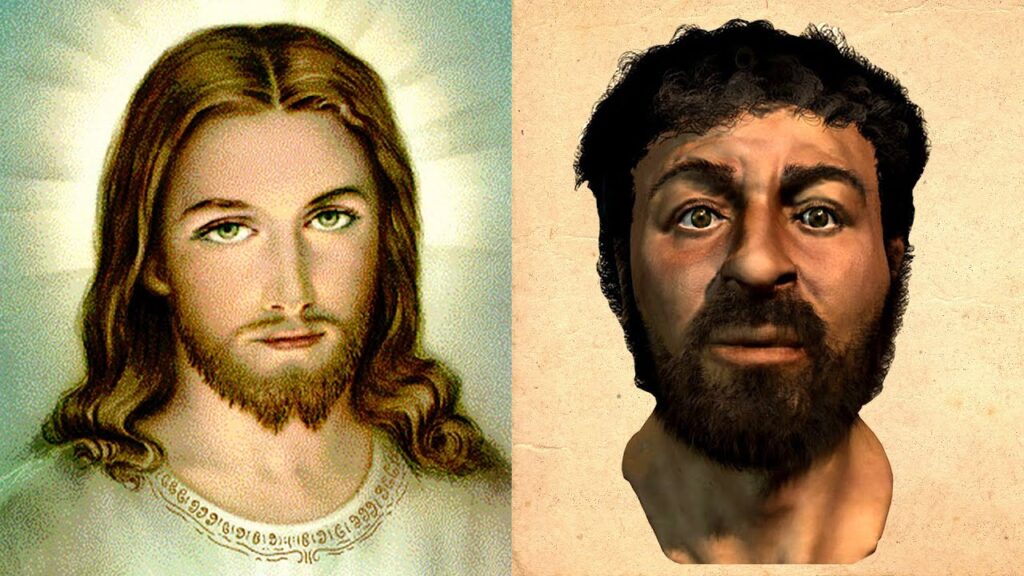

- A hiring module that downranks résumés with non-Western names.

- A healthcare chatbot that assumes whiteness is the default patient profile.

- A training slide that reinforces gender stereotypes because, well, “the data said so.”

In 2023, The Washington Post and the Algorithmic Justice League found that popular generative AI platforms frequently produced biased imagery when prompted with professional roles, suggesting that AI doesn’t just replicate bias: it can reinforce it with frightening fluency (Harwell).

Then there’s the murky question of authorship. If an AI wrote your onboarding module, who owns it? And should your learners be told that the warm, human-sounding coach in their feedback app is actually just a clever echo?

Best practice? Organizations should treat transparency as a first principle. Label AI-created content. Review it with human SMEs. Make bias detection part of your QA checklist. Assume AI has ethical blind spots, because it does.

2. How Do We Stay Legally Clear When AI Writes Our Content?

The legal fog around AI-generated content is, at best, thickening. Copyright issues are particularly treacherous. Generative AI tools, trained on scraped web data, can accidentally reproduce copyrighted phrases, formatting, or imagery without attribution.

A 2023 lawsuit against OpenAI and Microsoft by The New York Times exemplified the concern: some AI outputs included near-verbatim excerpts from paywalled articles (Goldman).

The same risk applies to instructional content, customer documentation, and marketing assets.

But copyright isn’t the only hazard:

- In regulated industries (e.g., pharmaceuticals, finance), AI-generated materials must align with up-to-date regulatory requirements. A chatbot that gives outdated advice could trigger compliance violations.

- If AI invents a persona or scenario that too closely resembles a real person or competitor, you could find yourself flirting with defamation.

Best practice?

- Use enterprise AI platforms that clearly state what training data they use and offer indemnification.

- Audit outputs in sensitive contexts.

- Keep a human in the loop when legal risk is on the table.

3. What About Data Privacy? How Do We Avoid Exposing Sensitive Information?

In corporate contexts, content often starts with sensitive data: customer feedback, employee insights, product roadmaps. If you’re using a consumer-grade AI tool and paste that data into a prompt, you may have just made it a permanent part of the model’s training data.

OpenAI, for instance, had to clarify that data entered into ChatGPT could be used to retrain models unless users opted out or used a paid enterprise plan with stricter safeguards (Heaven).

Risks aren’t limited to inputs. AI can also output information it has “memorized” if your organization’s data was ever part of its training set, even indirectly. For example, one security researcher found ChatGPT offering up internal Amazon code snippets when asked the right way.

Best practice?

- Use AI tools that support private deployment (on-premises or VPC).

- Apply role-based access controls to who can prompt what.

- Anonymize data before sending it to any AI service.

- Educate employees: “Don’t paste anything into AI you wouldn’t share on LinkedIn.”
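As a rough illustration of the anonymization step in the list above, here is a minimal Python sketch that scrubs email addresses and phone-like numbers from text before it leaves your network. It assumes two simple regular expressions are enough for a demo; the function name `redact_pii` and the `[EMAIL]`/`[PHONE]` placeholders are hypothetical, and real PII detection (names, employee IDs, addresses, health details in free text) needs much broader coverage, typically from a dedicated tool.

```python
import re

# Hypothetical, deliberately simple patterns for illustration only.
# Real PII detection must also catch names, IDs, addresses, and more.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Matches runs of at least 9 digit/separator characters that start and end
# with a digit; it may also catch dates or order numbers, erring toward over-redaction.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholder tokens
    before the text is pasted into, or sent to, any external AI service."""
    text = EMAIL_RE.sub("[EMAIL]", text)  # emails first, so their digits aren't read as phones
    return PHONE_RE.sub("[PHONE]", text)


if __name__ == "__main__":
    feedback = "Contact Dana at dana.lee@example.com or +1 (555) 010-4477 about the Q3 roadmap."
    print(redact_pii(feedback))
    # Contact Dana at [EMAIL] or [PHONE] about the Q3 roadmap.
```

Note that the name “Dana” survives: pattern matching catches structured identifiers, not people, which is one reason to pair anonymization with the other controls in the list rather than rely on it alone.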

4. What Kind of AI Are We Actually Using, and Why Does It Matter?

Not all AI is created equal. And understanding which kind you’re working with is critical for risk planning.

Let’s sort the deck:

- Generative AI creates new content. It writes, draws, narrates, codes. It’s the most impressive and most volatile category, prone to hallucinations, copyright issues, and ethical landmines.

- Predictive AI looks at data and forecasts trends, such as which employees might churn or which customers need support.

- Classifying AI sorts things into buckets, such as tagging content, segmenting learners, or prioritizing support tickets.

- Conversational AI powers your chatbots, help flows, and voice assistants. Left unsupervised, it can easily go off-script.

Each of these comes with different risk profiles and governance needs. But too many organizations treat AI like a monolith (“we’re using AI now”) without asking: which kind, for what purpose, and under what controls?

Best practice?

- Match your AI tool to the job, not the hype.

- Set different governance protocols for different categories.

- Train your L&D and legal teams to understand the difference.
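One way to make “different governance protocols for different categories” concrete is a small lookup table that a project must satisfy before launch. The Python sketch below is hypothetical: the category names follow the list above, but the three controls and their per-category values are assumptions chosen for illustration, not a standard or any vendor’s policy.

```python
from dataclasses import dataclass, fields
from enum import Enum


class AICategory(Enum):
    GENERATIVE = "generative"
    PREDICTIVE = "predictive"
    CLASSIFYING = "classifying"
    CONVERSATIONAL = "conversational"


@dataclass(frozen=True)
class Controls:
    human_review: bool     # a human (SME, legal, support lead) reviews outputs
    audit_logging: bool    # inputs and outputs are retained for audit
    user_disclosure: bool  # end users are told AI was involved


# Hypothetical baseline; each organization would set its own values.
GOVERNANCE = {
    AICategory.GENERATIVE: Controls(True, True, True),
    AICategory.CONVERSATIONAL: Controls(True, True, True),
    AICategory.PREDICTIVE: Controls(True, True, False),
    AICategory.CLASSIFYING: Controls(False, True, False),
}


def missing_controls(category: AICategory, in_place: Controls) -> list[str]:
    """List the controls the policy requires for this category that a project lacks."""
    required = GOVERNANCE[category]
    return [
        f.name
        for f in fields(Controls)
        if getattr(required, f.name) and not getattr(in_place, f.name)
    ]


if __name__ == "__main__":
    chatbot = Controls(human_review=False, audit_logging=True, user_disclosure=False)
    print(missing_controls(AICategory.CONVERSATIONAL, chatbot))
    # ['human_review', 'user_disclosure']
```

Keeping the policy in data rather than prose makes it easy to check in a launch checklist, and to tighten one category without touching the others.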

What Business Leaders Are Actually Saying

This isn’t just a theoretical exercise. Leaders are uneasy, and increasingly vocal about it.

In a 2024 Gartner report, 71% of compliance executives cited “AI hallucinations” as a top risk to their business (Gartner).

Meanwhile, 68% of CMOs surveyed by Adobe said they were “concerned about the legal exposure of AI-created marketing materials” (Adobe).

Microsoft president Brad Smith described the current moment as a call for “guardrails, not brakes,” urging companies to move forward, but with deliberate constraints (Smith).

Salesforce, in its “Trust in AI” guidelines, publicly committed to never using customer data to train generative AI models without consent, and built its own Einstein GPT tools to operate within secure environments (Salesforce).

The tone has shifted from wonder to caution. Executives want the productivity, but not the lawsuits. They want creative acceleration without reputational ruin.

So What Should Companies Actually Do?

Let’s anchor this whirlwind with a few clear stakes in the ground.

- Develop an AI Use Policy: Cover acceptable tools, data practices, review cycles, attribution standards, and transparency expectations. Keep it public, not buried in legalese.

- Segment Risk by AI Type: Treat generative AI like a loaded paintball gun: fun and colorful, but messy and potentially painful. Wrap it in reviews, logs, and disclaimers.

- Establish a Review and Attribution Workflow: Include SMEs, legal, DEI, and branding in any review process for AI-generated training or customer-facing content. Label AI involvement clearly.

- Invest in Private or Trusted AI Infrastructure: Enterprise LLMs, VPC deployments, or AI tools with contractual guarantees on data handling are worth their weight in uptime.

- Educate Your People: Host brown-bag sessions, publish prompt guides, and include AI literacy in onboarding. If your team doesn’t know the risks, they’re already exposed.
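The review and attribution workflow described in the list above can also be enforced as a simple publishing gate. This Python sketch works under stated assumptions: the reviewer roles mirror the ones named in the list (SMEs, legal, DEI, branding), while `ContentItem`, `publish_blockers`, and the `ai_label` field are hypothetical names, not features of any particular authoring tool or LMS.

```python
from dataclasses import dataclass, field
from typing import Optional

# Reviewer roles taken from the workflow above; adjust to your organization.
REQUIRED_REVIEWERS = frozenset({"sme", "legal", "dei", "branding"})


@dataclass
class ContentItem:
    title: str
    ai_generated: bool
    ai_label: Optional[str] = None  # visible disclosure, e.g. "Drafted with AI, reviewed by our team"
    approvals: set[str] = field(default_factory=set)


def publish_blockers(item: ContentItem) -> list[str]:
    """Return the reasons an item cannot be published yet; an empty list means it may ship."""
    if not item.ai_generated:
        return []  # this hypothetical gate only governs AI-involved content
    blockers = [
        f"missing approval: {role}"
        for role in sorted(REQUIRED_REVIEWERS - item.approvals)
    ]
    if not item.ai_label:
        blockers.append("missing AI-involvement label")
    return blockers


if __name__ == "__main__":
    draft = ContentItem("Onboarding module 3", ai_generated=True, approvals={"sme", "legal"})
    for reason in publish_blockers(draft):
        print(reason)
    # missing approval: branding
    # missing approval: dei
    # missing AI-involvement label
```

Returning a list of reasons, rather than a bare yes/no, gives authors something actionable and leaves an audit trail of why an item was held back.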

In Summary:

AI is not going away. And honestly? It shouldn’t. There’s magic in it: a dizzying ability to scale creativity, speed, personalization, and insight.

But the price of that magic is vigilance. Guardrails. The willingness to question both what we can build and whether we should.

So before you let the robots write your onboarding module or design your next slide deck, ask yourself: who’s steering this ship? What’s at stake if they get it wrong? And what would it look like if we built something powerful and responsible at the same time?

That’s the job now. Not just building the future, but keeping it human.

Works Cited:

Adobe. “Marketing Executives & AI Readiness Survey.” Adobe, 2024, https://www.adobe.com/insights/ai-marketing-survey.html.

Gartner. “Top Emerging Risks for Compliance Leaders.” Gartner, Q1 2024, https://www.gartner.com/en/documents/4741892.

Goldman, David. “New York Times Sues OpenAI and Microsoft Over Use of Copyrighted Work.” CNN, 27 Dec. 2023, https://www.cnn.com/2023/12/27/tech/nyt-sues-openai-microsoft/index.html.

Harwell, Drew. “AI Image Generators Create Racial Biases When Prompted with Professional Jobs.” The Washington Post, 2023, https://www.washingtonpost.com/technology/2023/03/15/ai-image-generators-bias/.

Heaven, Will Douglas. “ChatGPT Leaked Internal Amazon Code, Researcher Claims.” MIT Technology Review, 2023, https://www.technologyreview.com/2023/04/11/chatgpt-leaks-data-amazon-code/.

Salesforce. “AI Trust Principles.” Salesforce, 2024, https://www.salesforce.com/company/news-press/stories/2024/ai-trust-principles/.

Smith, Brad. “AI Guardrails Not Brakes: Keynote Address.” Microsoft AI Regulation Summit, 2023, https://blogs.microsoft.com/blog/2023/09/18/brad-smith-ai-guardrails-not-brakes/.